The theorems and corollaries above are intended for use as

schedulability tests. They can be applied directly to prove that

a task set will meet deadlines with DM or RM scheduling, either

before run time for a fixed set of tasks or during run time as an

admission test for a system with a dynamic set of tasks. With the

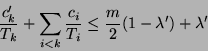

simpler forms, one computes ![]() and then checks the

schedulability condition once for the entire task set. With the

more general forms, one checks the schedulability condition for

each task. In the latter case the specific value(s) of

and then checks the

schedulability condition once for the entire task set. With the

more general forms, one checks the schedulability condition for

each task. In the latter case the specific value(s) of ![]() for

which the test fails provide some indication of where the problem

lies.

for

which the test fails provide some indication of where the problem

lies.

The schedulability tests of Theorems 12 and

18 allow preperiod deadlines, but are more complicated

than the corresponding utilization bound tests. It is natural to

wonder whether this extra complexity gains anything over the well

known technique of ``padding'' execution times and then using the

utilization bound test. By padding the execution times we mean

that if a task ![]() has execution time

has execution time ![]() and deadline

and deadline

![]() , we replace it by

, we replace it by ![]() , where

, where

![]() and

and ![]() .

.

With deadline monotonic scheduling, the original task ![]() can be scheduled to meet its deadline if the following condition

holds for

can be scheduled to meet its deadline if the following condition

holds for ![]() :

:

There are cases where this test is less accurate than that of

Theorem 18. Suppose we have three processors and three

tasks, with periods ![]() , deadlines

, deadlines ![]() ,

,

![]() , and compute times

, and compute times

![]() . Obviously, the

tasks are schedulable. However, if we apply the padded

utilization test to

. Obviously, the

tasks are schedulable. However, if we apply the padded

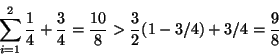

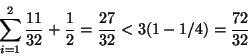

utilization test to ![]() , we have

, we have

![]() , and

, and

![]() . The test fails, as follows:

. The test fails, as follows:

A similar padding technique can be applied for EDF, but again it is sometimes less accurate than Theorem 12.

Of course, these schedulability tests are only sufficient

conditions for schedulability. They are like the Liu and Layland

![]() utilization bound, both in being very

conservative and in still having practical value.

utilization bound, both in being very

conservative and in still having practical value.

Though the utilization tests are not tight in the sense of being

necessary conditions for schedulability, Goossens, Funk, and

Baruah[8] showed that the EDF bound is tight in the

sense that there is no utilization bound

![]() , where

, where ![]() and

and

![]() , for which

, for which

![]() guarantees EDF schedulability. Since the EDF

utilization bound is tight it is natural to wonder whether the

same is true of the RM bound. The following threorem shows that

it is not tight.

guarantees EDF schedulability. Since the EDF

utilization bound is tight it is natural to wonder whether the

same is true of the RM bound. The following threorem shows that

it is not tight.

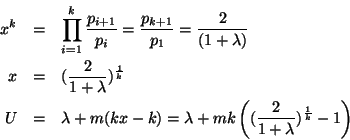

Proof:

The task set and analysis are derived from Liu and Layland[13].

The difference is that here there are ![]() processors instead of one, and the utilization of the

longest-period task is bounded by a

processors instead of one, and the utilization of the

longest-period task is bounded by a ![]() .

.

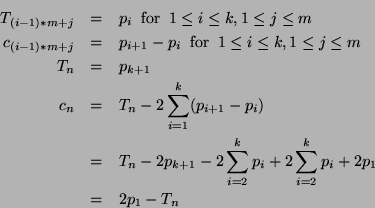

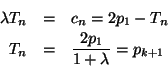

The task set contains ![]() tasks where

tasks where ![]() is an arbitrary

integer greater than or equal to 1. The task

execution times and periods are defined

in terms of a set of parameters

is an arbitrary

integer greater than or equal to 1. The task

execution times and periods are defined

in terms of a set of parameters

![]() as follows:

as follows:

These constraints guarantee that task ![]() barely has time to

complete if all

barely has time to

complete if all ![]() tasks are released together at time zero. The

RM schedule will have all

tasks are released together at time zero. The

RM schedule will have all ![]() processors busy executing tasks

processors busy executing tasks

![]() for

for

![]() out of the

out of the ![]() available time units,

leaving exactly

available time units,

leaving exactly ![]() units to complete

units to complete ![]() .

.

If

![]() , we have

, we have

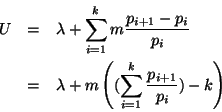

We will choose

![]() to minimize the total utilization, which is

to minimize the total utilization, which is

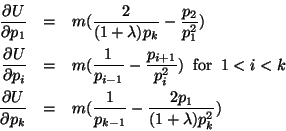

The partial derivatives of ![]() with respect to

with respect to ![]() are

are

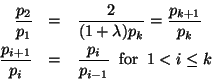

Since the second partial derivatives are all positive, a unique global minimum exists when all the first partial derivatives are zero. Solving the equations above for zero, we get

Let

![]() . It follows that

. It follows that

L'Hôpital's Rule can be applied to find the limit of the above expression

for large ![]() , which is

, which is

![]() .

.

![]()

We conjecture that the upper bound on the minimum achievable RM utilization achieved by the example above may be tight.

Srinivasan and Baruah[18] and Andersson, Baruah, and

Jonsson[2] showed how to relax the restriction that

![]() in the utilization tests, for situations

where there are a few high-utilization tasks. They

propose EDF and RM versions of a hybrid scheduling policy called

EDF/US[

in the utilization tests, for situations

where there are a few high-utilization tasks. They

propose EDF and RM versions of a hybrid scheduling policy called

EDF/US[![]() ], where

], where

![]() for EDF

and

for EDF

and

![]() for RM.

for RM.

EDF/RM-US[![]() ]:

]:

(heavy task rule)

If

![]() then schedule

then schedule ![]() 's

jobs at maximum priority.

's

jobs at maximum priority.

(light task rule)

If

![]() then schedule

then schedule ![]() 's

jobs according to their normal EDF or RM priorities.

's

jobs according to their normal EDF or RM priorities.

They then proved two theorems, which we paraphrase and combine as follows:

The proof is based on the observation that the upper bound on

total utilization guarantees that the number of heavy tasks cannot

exceed ![]() . The essence of the argument is that the algorithm

can do no worse than scheduling each of the heavy

tasks on its own processor, and then scheduling the remainder (which

must must be light on the remaining processors) using the regular

EDF or RM algorithm.

. The essence of the argument is that the algorithm

can do no worse than scheduling each of the heavy

tasks on its own processor, and then scheduling the remainder (which

must must be light on the remaining processors) using the regular

EDF or RM algorithm.

The above result can be generalized slightly, as follows:

Proof:

As argued by Srinivasan and Baruah, the

performance of this algorithm cannot be worse than an algorithm that

dedicates one processor to each of the heavy tasks, and uses EDF or RM to

schedule the remaining tasks on the remaining processors.

The utilization bound theorem then guarantees the remaining tasks

can be scheduled on the remaining processors.

![]()

If there is a need to support preperiod deadlines, this idea can be taken further, by changing the ``heavy task rule'' to single out for special treatment a few tasks that fail the test conditions of one of our schedulability tests that allows preperiod deadlines, and run the rest of the tasks using EDF or DM scheduling.