| Real Time Systems: Notes |

These notes make use of mathematical symbols that may not be visible with the default settings on some versions of some browsers.

| Symbol | Symbol Name | Symbol | Symbol Name | Symbol | Symbol Name |

|---|---|---|---|---|---|

| ∼ | sim | ∑ | sum | ⌈ | lceil |

| ⌉ | rceil | ⌊ | lfloor | ⌋ | rfloor |

| → | rarr | ⇒ | rArr | ∞ | infin |

| < | lt | ≤ | le | > | gt |

| ≥ | ge | ≠ | ne | δ | delta |

| Δ | Delta | ∂ | part | τ | tau |

| π | pi | φ | phi |

If a blank space appears to the left of any of the names in the table above, or the symbol looks strange, try changing the font settings on your browser or using a different browser.

This analysis is based on the following assumptions:

The original paper on this topic, by Liu & Layland, coined the term Rate Monotone scheduling, but made the assumption that the relative deadline of each task is the same as its period. Under that condition, RM and DM scheduling are equivalent. Later research pointed out relaxed the assumption that relative dealine equal period, and showed that what really matters is the relative deadline.

People still sometimes use the terms "Rate Monotone" and "Rate Monotonic" (RM) loosely, to include both RM and DM scheduling, since most of the results for RM scheduling have been shown to also apply to DM scheduling.

Theorem: A system of simply periodic, independent, preemptable tasks whose relative deadlines are not smaller than their periods is schedulable on one processor according to the RM algorithm if and only if its total utilization is less than or equal to one.

Here, a system of tasks is simply periodic if for every pair of tasks such that Ti < Tk, Tk is an integer multiple of pi. Such a system is sometimes said to be harmonic.

Theorem 6.4 (J. Liu): A system of periodic independent preemptable tasks that are in phase and whose relative deadlines are less than or equal to their periods is schedulable on one processor according to the DM algorithm whenever it can be scheduled according to any fixed-priority algorithm.

J. Liu leaves the crucial part of the proof of this theorem as an exercise for the reader. It is probably a good thing for you do do that exercise.

The in-phase restriction can later be dropped, using the critical instant theorem.

Notation::

In the original L&Lpaper, τi is the name of a task, Ti is the task's period, and Ci is the task's compute (execution) time. We use that notation for the following.

A critical instant for a task is defined to be an instant at which a request for that task will have the largest response time

A critical time zone for a task is the time interval between a critical instant and the end of the reponse to the corresponding request of the task.

Theorem: A critical instant for any task occurs whenever the task is requested simultaneously with requests for all higher priority tasks.

The definitions and theorem above are stated in the words of L & L. They may seem rather imprecise. More precise definitions will be given later.

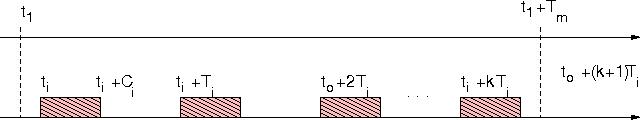

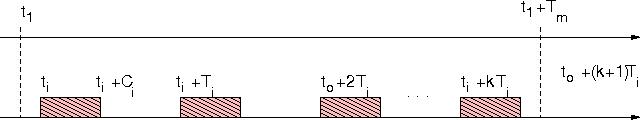

Proof: Let τ1, τ2, ... τm denote a set of priority-ordered tasks with τm being the task with the lowest priority. Consider a particular request for τm that occurs at time t1. Suppose that between t1 and t1+Tm, the time at which the subsequent request of τm occurs, requests for task τi , i < m, occur at ti, ti+Ti, ... ti+kTi , as illustrated in Figure 1.

Clearly, the preemption of τm by τi will cause a certain amount of delay in the completion of the request for τm that occurred at t1, unless the request for τm is completed before ti.

Moreover, from the figure above we see immediately that advancing the request time t2 will not speed up the completion of τm. The completion time of τm is either unchanged or delayed by such advancement. Consequently, the delay in the completion of τm is largest when t2 coincides with t1.

Repeating the argument for all τk, k = 2, ... m-1, we prove the theorem.

The diagram, notation, and wording are exactly as in the published paper. In particular, the notation Ci is used for execution time and Ti is used for the period of task τi.

The statement that we are supposed to see immediately above is not so obviously true, as the example below illustrates. Consider the following task set.

| i | Ci | Ti |

|---|---|---|

| 1 | 10 | 50 |

| 2 | 20 | 60 |

| 3 | 30 | 70 |

The diagram below illustrates how advancing the request time t2 to t1 will speed up the completion of t3 (unless we also simultaneously move up the request time of t1).

To avoid this fallacy, we need to look at a longer interval, which we call the busy interval leading up to the critical instant.

A critical instant of a task τi is a time instant t (in a particular schedule for a task set containing τi) such that

Theorem 6.5: In a fixed priority (preemptive) scheduling system where every job completes before the next job in the same task is released, a critical instant of any task τi occurs when one of its jobs Ji,c is released at the same time with a job in every higher priority task, i.e., ri,c = rk,x for some x for each k = 1, 2, ... i-1.

The Critical Instant Theorem is sometimes called the Critical Zone Theorem.

A consequence of this theorem is that RM/DM scheduling is optimal among fixed-priority scheduling policies for periodic task sets.

That is a direct consequence of Theorem 6.4, since Theorem 6.5 has shown that the worst-case response time of any task is achieved when all the tasks are in phase.

Suppose t is a critical instant for τi in some schedule, at which a request for Ji,c achieves the maximum response time for τi or exceeds its deadline.

We will show that this schedule can be transformed to one in which there is a critical instant that satisfies the conditions of the theorem.

Consider the level- πi busy interval relative to the priority of τi leading up to time t+ri,c. This is the longest interval [t',t+ri,c] in which there is no idle time and no task of priority lower than τi executing.

If we truncate the schedule to start with the task release that occurs at time t', shifting all the times down accordingly, the response time of Ji,c will be unchanged. Thus, without loss of generality we can assume we have a critical instant of this form.

After this truncation, the processor is busy between times 0 and t-t' executing jobs of tasks priority at least as high as τi, and Ji,1 is requested at the critical instant φi.

We will call the values φk the "phasings" of the respective tasks, since they represent the offset of the first release of each task from zero.

Let Wi,1 be the response time of Ji,1.

Let τk be any task that has higher priority than τi, and let φk be the release time of the first job of τk.

From φk to φi+Wi,1 at most ⌈ (Wi,1+φi-φk)/Tk ⌉ jobs of τk are released.

Each of these demands Ck units of processor time.

Thus, the total demand for processor time from τi and all the jobs of higher priority that are requested in the interval [0,t), for any value of t up until the point where τi completes, is is wi(t) where t = φi+ Wi,1 and

| wi(t) = Ci + |

|

This is not just an upper bound. It is exactly how much must be done in this interval in order to Ji,1 to complete. Since all the other jobs have higher priority than Ji,1, it can only complete if after all the higher priority jobs have completed. That is, for Ji,1 to complete at time Wi,1 +φi we must have

|

It follows that Wi,1 can be computed as t - φi for the minimum value t in the interval [0,Ti) that satisfies the equation

To see that this is true, observe that the function wi(t) is a staircase function of t, that lies above the supply line y(t) = t until it intersects. The point of intersection is the minimum solution of the above recurrence. This is illustrated in the following figure, in which the point of intersection is indicated by a circle.

The value of Wi,1 clearly depends on φi and φk for k = 1, ... i-1. We want to know how large Wi,1 can be, in the worst case, over all possible task phasings. The way to do this is to analyze how changing the phase of each task affects the value of Wi,1.

Wi,1 is the first point at which the function

|

If we decrease

φk for any

k in the range 1...i-1 that can only increase the value of

|

The solid blue line is the original step function, with nonzero φk and the dashed redish brown line is the step function with the phase φk reduced to zero. The diagram shows how reducing the phase to zero can push the point of completion out further on the time axis.

It follows that Wi,1 is maximized when φk is zero for every k = 1,2,...i-1.

Similarly, decreasing φi increases the value of t -φi, so Wi,1 is maximized when maximized when φk is zero for every k = 1,2,...i.

In summary, we have shown that the response time is maximized when φk = 0 for every k = 1,2,...i, and that maximum response time of τi is the smallest value of t such that wi(t) = t, where

|

If there is no solution, the job is not schedulable.

The function wi(t) is called the time-demand function for task τi.

The minimum solution is usually called the least fixed point of a recurrence.

For each value t = jTk, for k = 1, 2, ...i and j = 1, 2, ... ⌊ min{Ti,Di}/Tk ⌋, verify that wi(t) ≤ t.

If this inequality is satisfied at any of these instants, then τi is schedulable.

The time complexity of this test is O(nqn,1), where qn,1 is the ratio of the period ratio of the system.

The test just looks at points where jobs of tasks with higher priority than τi would be released, if all tasks were started at time zero.

| © 1998, 2003 T. P. Baker. ($Id: rmscheduling.html,v 1.3 2008/08/25 11:18:48 baker Exp baker $) |